John McCutcheon¹, Seonhong Hwang²,³, Chung-Ying Tsai²,³, and Alicia M. Koontz²,³

1. Department of Biomedical Engineering, Robert Morris University, Pittsburgh, PA

2. Human Engineering Research Laboratories, VA Pittsburgh Healthcare System, Pittsburgh, PA

3. Department of Rehabilitation Science and Technology, University of Pittsburgh, Pittsburgh, PA

Introduction

Approximately 256,000 persons in the United States have been diagnosed with a spinal cord injury (SCI) (NSCISC, 2012), many of whom rely on wheelchairs for mobility. Individuals with SCI are often in their wheelchair for 12 to 18 hours per day, every day, using only their upper body as the main source of propulsion (Cooper, 2003). In addition to propulsion, transfers between the wheelchair and other surfaces such as the bed, commode, bathtub, and car are also required and rely heavily upon the upper limbs to move the body from point to point. It is therefore not surprising that a large percentage of wheelchair users with SCI experience shoulder pain and injury (Hogaboom, 2013). The repetitive movements and internal joint loading associated with transfers are believed to lead to secondary upper limb pain and injuries (Gagnon, 2008). In addition, transfers carry an increased risk of falls and fall-related injuries and deaths (Saverino, 2006).

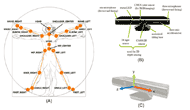

Figure 1. (A) All human joints that are tracked by Kinect (Clark et al., 2012); (B) Sensor components of Kinect; (C) Kinect coordinate system.

Vicon 3D motion capture systems are widely used in biomechanical studies and provide highly accurate kinematic data using passive reflective markers placed on specific anatomical landmarks. However, these systems are costly, and the setup and post-processing procedures are complex and time-consuming. The recently released Microsoft Kinect sensor is a low-cost, portable system that can detect human motion in real time with minimal setup. Kinect provides full-body 3D motion capture and joint tracking, and permits total freedom of movement without holding or wearing any markers or specialized equipment. Kinect uses infrared light and a video camera to map the area in front of it in 3D, and a randomized decision forest algorithm to automatically determine joint centers (Figure 1). The Kinect is not highly accurate because it was designed to detect 'gross' movement for the gaming industry; however, many studies that have analyzed Kinect sensor data suggest that its precision may be good enough for certain rehabilitation applications (Baena, 2012; Clark, 2012; 2013). The purpose of this study was to compare upper limb joint motions during wheelchair transfers as measured by the Vicon and the Kinect, in order to assess whether the Kinect could be used as a tool to help assess transfer skills.

Methods

Subjects

The study was approved by the Department of Veterans Affairs Institutional Review Board. The inclusion criteria for the study were: having an SCI at the C4 level or below for at least one year; being over 18 years of age; having no upper extremity pain that would interfere with transfers; and being able to transfer independently.

Experimental Protocol

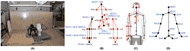

Figure 2. (A) Transfer assessment station with toilet setup; (B) Vicon markers (red circles) and the joint centers computed from Vicon marker data (black circles); (C) Overlay of Vicon markers (red triangles) and Kinect joint centers (purple circles) and (D) joint centers detected by Kinect.

After the informed consent process was completed, the Kinect for Windows was mounted on a tripod approximately 2.5 meters in front of the subject and 1 meter above the floor (Kinect for Windows, Human Interface Guidelines, 2013), centered between where subjects placed their wheelchair and the transfer surface. Reflective markers were placed on anatomical landmarks on the subjects' upper limbs, trunk, and pelvis and tracked by ten Vicon cameras surrounding the transfer station (Figure 2). Subjects were asked to transfer from a wheelchair to a commode five times. A simple graphical user interface was created to collect the three-dimensional joint centers from the Kinect. The program was developed in C# using Visual Studio 2012, .NET Framework 4.0, and the Kinect SDK (version 1.0.3.191). Kinect and Vicon data were recorded simultaneously for the duration of each transfer, at 30 Hz and 120 Hz respectively.

Data Analysis

The quaternion method was used to compute the bilateral shoulder abduction/adduction, shoulder flexion/extension, elbow flexion/extension, and wrist flexion/extension ranges of motion. The joint angles were determined from the 3D joint center positions reported by the Kinect and from the 3D joint centers derived from the marker data in the Vicon system. To determine the shoulder angles, vectors were drawn between the left shoulder and left elbow joint centers and between the shoulder center and spine center. The relative angle between the two vectors, v1 (distal or child segment) and v2 (proximal or parent segment), was determined using the following equation:

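$$\theta = \arccos\left(\frac{\mathbf{v}_1 \cdot \mathbf{v}_2}{\lVert \mathbf{v}_1 \rVert \, \lVert \mathbf{v}_2 \rVert}\right)$$

The axis of rotation is determined from the cross product of the vectors:

$$\mathbf{u} = \frac{\mathbf{v}_1 \times \mathbf{v}_2}{\lVert \mathbf{v}_1 \times \mathbf{v}_2 \rVert}$$

Finally, the four elements of the quaternion were established using the following equation:

$$Q = \left(\cos\frac{\theta}{2},\ \mathbf{u}\,\sin\frac{\theta}{2}\right), \quad \text{where } \mathbf{u} = (0, u_x, u_y, u_z)$$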

The same method was used to calculate the elbow and wrist joint angles. To determine the trunk flexion/extension angle, a vector was drawn between the neck and spine centers, and its angle relative to the global vertical axis was determined. The trunk torsional angle was defined as the angle between a vector joining both shoulder joint centers and the global horizontal axis of the Vicon and Kinect reference frames, respectively.
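The angle, axis, and quaternion computation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' C# implementation: the joint coordinates are made up, the direction in which each segment vector is drawn is assumed, and the vertical axis is taken to be +y (as in the Kinect skeleton coordinate system).

```python
import math

def sub(a, b):
    """Vector from point b to point a."""
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def norm(a):
    return math.sqrt(dot(a, a))

def relative_angle(v1, v2):
    """Angle theta (radians) between child vector v1 and parent vector v2."""
    c = dot(v1, v2) / (norm(v1) * norm(v2))
    return math.acos(max(-1.0, min(1.0, c)))  # clamp against round-off

def rotation_axis(v1, v2):
    """Unit axis u = (v1 x v2) / ||v1 x v2||."""
    n = cross(v1, v2)
    length = norm(n)
    if length == 0.0:
        raise ValueError("vectors are parallel; rotation axis is undefined")
    return tuple(x / length for x in n)

def quaternion(v1, v2):
    """Q = (cos(theta/2), u * sin(theta/2)) as a 4-tuple (w, x, y, z)."""
    theta = relative_angle(v1, v2)
    u = rotation_axis(v1, v2)
    s = math.sin(theta / 2)
    return (math.cos(theta / 2), u[0] * s, u[1] * s, u[2] * s)

# Hypothetical joint centers in meters (not measured data).
shoulder_left = (0.0, 1.0, 2.0)
elbow_left = (0.3, 1.0, 2.0)
shoulder_center = (0.2, 1.0, 2.0)
spine = (0.2, 0.6, 2.0)

v1 = sub(elbow_left, shoulder_left)   # child segment: upper arm (assumed direction)
v2 = sub(spine, shoulder_center)      # parent segment: trunk (assumed direction)
theta_deg = math.degrees(relative_angle(v1, v2))
q = quaternion(v1, v2)

# Trunk flexion/extension: angle between the spine-to-neck vector and vertical.
neck = (0.2, 1.0, 1.6)                # hypothetical: trunk leaning toward the sensor
vertical = (0.0, 1.0, 0.0)            # assumption: +y is the global vertical
trunk_deg = math.degrees(relative_angle(sub(neck, spine), vertical))
```

With these made-up coordinates the upper arm is perpendicular to the trunk, so `theta_deg` is 90 degrees, and the leaning trunk gives a `trunk_deg` of about 45 degrees. The clamp inside `relative_angle` guards against floating-point round-off pushing the cosine slightly outside [-1, 1].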

Pearson's correlation coefficients and root mean square errors (RMSEs) between the Kinect and Vicon upper limb joint angle time series were computed. Mean peak angles and z-scores for each of the five trials were computed to investigate within-subject reliability.
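As a rough illustration of these two agreement measures, the sketch below compares a synthetic 120 Hz "Vicon" angle curve with a synthetic 30 Hz "Kinect" curve. The paper does not say how the two sampling rates were reconciled; keeping every fourth Vicon sample, and assuming both recordings start at the same instant, are assumptions made here for simplicity. All data and function names are hypothetical.

```python
import math

def downsample(samples, factor):
    """Keep every `factor`-th sample (e.g. 120 Hz -> 30 Hz with factor 4)."""
    return samples[::factor]

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def rmse(x, y):
    """Root mean square error between two equal-length series."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))

# Synthetic one-second joint angle curves in degrees (not measured data).
vicon = [40 + 30 * math.sin(2 * math.pi * j / 120) for j in range(120)]
# The "Kinect" curve follows the same pattern with a constant 10-degree offset.
kinect = [40 + 30 * math.sin(2 * math.pi * i / 30) + 10 for i in range(30)]

vicon_30hz = downsample(vicon, 4)
r = pearson_r(kinect, vicon_30hz)
error = rmse(kinect, vicon_30hz)
```

In this constructed example the correlation is essentially 1 while the RMSE is 10 degrees, which shows how a curve can follow the reference pattern closely and still differ from it in magnitude.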

Results

Two male subjects participated in this pilot study. Subject 1 was age 21, SCI level complete T9-12, and five years post SCI. Subject 2 was age 28, SCI level complete L2-3, and 19 years post SCI.

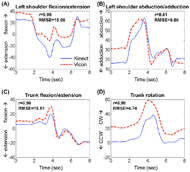

Figure 3. Examples of joint angles during the transfer to the toilet for Subject 1, (A) left shoulder flexion/extension, (B) left shoulder abduction/adduction, (C) trunk flexion/extension, (D) trunk rotation angles (solid line: Kinect; dotted line: Vicon).

Moderate to high correlation coefficients between the Kinect and Vicon were found for the shoulder angles in the sagittal and frontal planes (r = 0.54 to 0.85), except for the sagittal angle of Subject 2 (r = 0.34). The elbow joint angles also had moderate to high correlations (r = 0.47 to 0.90), while the wrist joint angle had very low to moderate correlations (r = 0.09 to 0.51) for both subjects. Trunk angles of both subjects had high correlation coefficients for both sagittal and rotational motion (r = 0.80 to 0.96).

The mean RMSEs for the shoulder angle (sagittal and frontal) ranged from 10.57 to 20.84 degrees. The mean RMSEs for the elbow angle (sagittal) ranged from 16.95 to 21.24 degrees, those for the wrist angle (sagittal) ranged from 23.91 to 48.09 degrees, and those for the trunk angle (sagittal and horizontal) ranged from 5.46 to 21.22 degrees (Table 1). While large differences were found for some of the angles, these differences were consistent within a subject and across the five trials. The Kinect and Vicon peak angles for each trial were within one standard deviation of the mean measured by each system (z-score data in Table 2). The Kinect data tracked the general pattern of upper limb and trunk motion well (Figure 3).
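The per-trial z-scores of the peak angles can be computed as in the following minimal sketch. The peak values are invented for illustration, and the use of the sample standard deviation (n - 1 in the denominator) is an assumption, since the paper does not state which form was used.

```python
import statistics

def peak_z_scores(peaks):
    """z-score of each trial's peak angle relative to the mean over trials."""
    mean = statistics.mean(peaks)
    sd = statistics.stdev(peaks)  # sample standard deviation (assumption)
    return [(p - mean) / sd for p in peaks]

# Hypothetical peak shoulder angles (degrees) from five trials of one system.
peaks = [50.0, 54.0, 52.0, 51.0, 53.0]
z = peak_z_scores(peaks)

# Trials whose peak lies within one standard deviation of the mean.
within_one_sd = [abs(value) <= 1.0 for value in z]
```

Running the same function on the Kinect peaks and on the Vicon peaks would give one z-score per trial per system, which is the kind of within-subject comparison described above.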

Table 1. Pearson correlation coefficients (R) and RMSE (degrees) between the Kinect and Vicon joint angles for each subject.

| Subject | Joint motion | Leading (left) arm: R | Leading (left) arm: RMSE | Trailing (right) arm: R | Trailing (right) arm: RMSE |
|---|---|---|---|---|---|
| 1 | Shoulder flexion/extension | 0.56 (0.39) | 16.10 (5.11) | 0.85 (0.07) | 12.48 (3.74) |
| | Shoulder abduction/adduction | 0.75 (0.15) | 10.57 (2.04) | 0.68 (0.13) | 20.84 (4.49) |
| | Elbow flexion/extension | 0.47 (0.37) | 17.88 (3.62) | 0.90 (0.04) | 17.18 (7.26) |
| | Wrist flexion/extension | 0.51 (0.11) | 33.02 (7.13) | 0.23 (0.37) | 23.91 (4.99) |
| | Trunk flexion/extension | 0.94 (0.02) | 13.44 (3.68) | - | - |
| | Trunk rotation | 0.91 (0.05) | 5.46 (0.78) | - | - |
| 2 | Shoulder flexion/extension | 0.34 (0.20) | 19.40 (2.60) | 0.54 (0.18) | 10.99 (1.41) |
| | Shoulder abduction/adduction | 0.74 (0.21) | 14.51 (4.36) | 0.78 (0.20) | 17.35 (2.90) |
| | Elbow flexion/extension | 0.60 (0.25) | 16.95 (2.46) | 0.67 (0.18) | 21.24 (2.93) |
| | Wrist flexion/extension | 0.09 (0.21) | 48.09 (4.76) | 0.31 (0.21) | 30.56 (1.49) |
| | Trunk flexion/extension | 0.80 (0.10) | 21.22 (2.17) | - | - |
| | Trunk rotation | 0.96 (0.01) | 4.79 (1.32) | - | - |

Discussion

The results of this study suggest that the trunk, shoulder, and elbow can be monitored well with the Kinect system. Despite large magnitude differences between the two systems, inter-trial reliability for each subject was similar between the Vicon and Kinect. Moreover, the Kinect appeared to track the movement patterns of the shoulder, elbow, and trunk well, based on the moderate to high correlations between the Kinect and Vicon motion curves. The plots of the Kinect and Vicon joint angle patterns show that at some points in a trial the patterns line up well, while at others large magnitude differences occur. The maximum difference in joint angle between the Vicon and Kinect was about 24 degrees, and the highest error was observed in the wrist joint angle. These discrepancies could be due to the complexity of tracking upper body motions in a seated position while the body is also pivoting towards and away from the Kinect sensor. The discrepancies may also be attributed to differences in the joint center calculations between the Vicon and the Kinect. It is possible that the results will improve as new Kinect software and hardware releases increase its ability to accurately detect and track the joint centers. A new system with much higher resolution and joint tracking capabilities is expected to be released early in 2014.

Future work is needed to better understand the position of the body at the times when larger errors occurred and to determine whether adjusting the Kinect sensor location or adding another Kinect sensor could reduce the errors. The next step after testing reliability is to compare the Kinect data to a quantitative clinical measure of transfer technique and determine whether the two measurements are also correlated. If so, then the Kinect may be able to discern a 'proper' technique from a 'poor' one. A future goal related to this project is to use the Kinect to develop an interactive transfer training system that is low-cost, practical, and easy to use.

Conclusions

The results of this study suggest that the shoulder, elbow, and trunk joints can be monitored well with the Kinect system. The Microsoft Kinect has great potential for tracking transfers in a clinical environment because it is portable, markerless, and inexpensive. Although the Kinect is not as accurate as the Vicon system, the differences detected between the two systems were consistent across trials within a subject. The accuracy of the Kinect is expected to increase as new hardware and software become available. Future work will determine whether it can become a helpful device for the assessment of transfer skills and for guided practice both in the clinic and in the home.

References

Cooper, R. A., Boninger, M. L., Cooper, R., & Thorman, T. (2003). Wheelchairs and Seating. Ch. 46, pp. 635-654.

Hogaboom, N., Fullerton, B., Rice, L., Oyster, M., & Boninger, M. (2013). Ultrasound Changes, Pain, and Pathology in Shoulder Tendons after Repeated Wheelchair Transfers. Retrieved from http://resna.org/conference/proceedings/2013/Wheeled%20Mobility/Student%20Scientific/Hogaboom.html

Gagnon, D., Nadeau, S., Noreau, L., Dehail, P., & Piotte, F. (2008). Comparison of peak shoulder and elbow mechanical loads during weight-relief lifts and sitting pivot transfers among manual wheelchair users with spinal cord injury. J Rehabil Res Dev, 45(6), 863-873.

Saverino, A., Benevolo, E., Ottonello, M., Zsirai, E., & Sessarego, P. (2006). Falls in a rehabilitation setting: functional independence and fall risk. Eura Medicophys, 42(3), 179-184.

Baena, A. F., Susin, A., & Lligadas, X. (2012). Biomechanical validation of upper-body and lower-body joint movements of Kinect motion capture data, Intelligent Networking and Collaborative Systems (INCoS), 2012 4th International Conference.

Clark, R. A., Pua, Y., Fortin, K., Ritchie, C., & Webster, K. E. (2012). Validity of the Microsoft Kinect for assessment of postural control. Gait and Posture, 36, 372-377.

Clark, R. A., Pua, Y., Bryant, A. L., & Hunt, M. A. (2013). Validity of the Microsoft Kinect for providing lateral trunk lean feedback during gait retraining. Gait & Posture, 38(4), 1064-1066.

Kinect for Windows, Human interface guidelines v.1.8, 2013. http://go.microsoft.com/fwlink/?LinkID=247735

Acknowledgements

The contents of this paper do not represent the views of the Department of Veterans Affairs or the United States Government. This material is based upon work supported by the Department of Veterans Affairs (A4489R) and the National Science Foundation, Project EEC 0552351.
